Google Cloud Storage as a cache platform

In Apps Script library with plugins for multiple backend cache platforms, I covered a way to get more into the cache and property services, and the bmCrusher library came with built-in plugins for Drive, CacheService and PropertiesService. Because these are designed as plug-ins, we can add any number of back ends as long as they present the same interface methods. Now bmCrusher also has a built-in plugin for Cloud Storage.

Benefits of using cloud storage

Aside from potentially being permanent, Cloud Storage can hold much bigger volumes of data than the other platforms. Because compression and spreading the data across multiple physical items are both inherited from the Crusher service, we can minimize the space used and avoid issues with upload size limits. Most importantly, using Cloud Storage as the back end means you can share data across multiple projects.
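To make the idea of spreading data across multiple physical items a bit more concrete, here’s a minimal sketch. It is not how bmCrusher does it: the function names, the key naming scheme and the header record are all my own assumptions for illustration, the store is just a Map, and compression is left out entirely.

```javascript
// Hypothetical illustration only: split a string into pieces no bigger than
// chunkSize, store each piece under its own key, and keep a small header
// record under the main key that lists the pieces in order.
function putChunked(store, key, text, chunkSize) {
  const chunkKeys = [];
  for (let i = 0; i < text.length; i += chunkSize) {
    const chunkKey = `${key}_chunk_${chunkKeys.length}`;
    store.set(chunkKey, text.slice(i, i + chunkSize));
    chunkKeys.push(chunkKey);
  }
  store.set(key, JSON.stringify({ chunkKeys }));
}

// Read the header, fetch each piece and join them back together.
function getChunked(store, key) {
  const header = store.get(key);
  if (header === undefined) return null;
  return JSON.parse(header)
    .chunkKeys.map((chunkKey) => store.get(chunkKey))
    .join("");
}

const physicalStore = new Map();
putChunked(physicalStore, "report", "abcdefghij", 4);
console.log(physicalStore.size); // 4: three pieces plus the header
console.log(getChunked(physicalStore, "report")); // abcdefghij
```

The caller only ever deals with the main key, which is why a size limit on any single physical item stops being a problem.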

How to use

Just as in the other examples, it starts with a store being passed to the crusher library to be initialized. The gcsStore is actually managed by another library (as described here), which presents the same methods as the CacheService, so we can simply reuse the built-in CacheService plugin directly. In any case, the entire plugin is implemented in bmCrusher, so you simply have to initialize it. This setup uses a service account to access the storage bucket, but you can also use the ScriptApp access token if it’s properly scoped. I’ll cover both options later.
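To show what presenting the same methods as the CacheService means in practice, here’s a minimal sketch of a store with put, get and remove, which are the CacheService method names. The makeMemoryStore function is hypothetical and is not part of bmCrusher or cGcsStore; it keeps everything in memory and ignores the expiry argument to stay short.

```javascript
// Hypothetical in-memory store with CacheService-style methods.
// Illustrative only: any back end exposing these methods could stand in.
function makeMemoryStore() {
  const items = new Map();
  return {
    // CacheService stores strings; the expiry argument is accepted but
    // deliberately ignored in this sketch
    put(key, value, expirationInSeconds) {
      items.set(key, String(value));
    },
    // like CacheService, return null when the key is not there
    get(key) {
      return items.has(key) ? items.get(key) : null;
    },
    remove(key) {
      items.delete(key);
    },
  };
}

const memoryStore = makeMemoryStore();
memoryStore.put("greeting", "hello", 60);
console.log(memoryStore.get("greeting")); // hello
memoryStore.remove("greeting");
console.log(memoryStore.get("greeting")); // null
```

Anything written against those three methods doesn’t need to know which back end it’s talking to, which is what makes reusing a plugin possible.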

From now on, the exact same methods introduced in Apps Script library with plugins for multiple backend cache platforms will operate on Cloud Storage instead of the other platforms. Here’s a refresher.

Writing

All writing is done with a put method.

put takes 3 arguments

- key – a string with some key to store this data against

- data – the data to store. It automatically detects and converts to and from objects, so there’s no need to stringify anything.

- expiry – optionally provide a number of seconds after which the data should expire.

Reading

get takes 1 argument

- key – the string you put the data against; get will restore the data to its original state
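Here’s a rough sketch of the behaviour described above, where whatever you put comes back in its original form without you having to stringify anything. It’s a stand-in I’ve made up for illustration (makeConvertingStore is a hypothetical name), not bmCrusher’s actual conversion code: it wraps the data in a small JSON envelope on the way in and unwraps it on the way out.

```javascript
// Hypothetical stand-in for the conversion step, not bmCrusher's own code.
// Whatever is passed to put is wrapped in an envelope and stored as a string;
// get parses the envelope and hands back the original value.
function makeConvertingStore() {
  const items = new Map();
  return {
    put(key, data) {
      items.set(key, JSON.stringify({ data }));
    },
    get(key) {
      const raw = items.get(key);
      return raw === undefined ? null : JSON.parse(raw).data;
    },
  };
}

const convertingStore = makeConvertingStore();
convertingStore.put("profile", { name: "crusher", tags: ["a", "b"] });
convertingStore.put("note", "just a string");

console.log(convertingStore.get("profile").tags[1]); // b
console.log(convertingStore.get("note")); // just a string
```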

Removing

remove takes 1 argument

- key – the string you put the data against

Expiry

The expiry mechanism works exactly the same as on the other platforms. If an item has expired, it will behave as if it doesn’t exist, whether or not it still exists in storage. Accessing an item that has expired will delete it. Cloud Storage also has lifecycle management. Any item that has an expiry (the default is for the item to be permanent) will be marked for lifecycle management by Cloud Storage and will eventually be deleted, up to a day after it expires.
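The expiry rules above can be sketched like this. It’s an illustration under my own assumptions rather than the crusher’s implementation: makeExpiringStore and its has method are hypothetical, the store is a Map, and the clock is passed in so the behaviour is easy to follow. The later clean-up by Cloud Storage lifecycle management is not modelled.

```javascript
// Hypothetical sketch of the expiry semantics. now is a function returning
// the current time in milliseconds, injected so the demo can control it.
function makeExpiringStore(now) {
  const items = new Map();
  return {
    put(key, data, expirySeconds) {
      // no expiry means the item is permanent
      const expiresAt = expirySeconds ? now() + expirySeconds * 1000 : null;
      items.set(key, { data, expiresAt });
    },
    get(key) {
      const item = items.get(key);
      if (!item) return null;
      if (item.expiresAt !== null && now() >= item.expiresAt) {
        // accessing an expired item deletes it and reports it as absent
        items.delete(key);
        return null;
      }
      return item.data;
    },
    // physical presence in storage, only here to make the demo visible
    has(key) {
      return items.has(key);
    },
  };
}

let clock = 0;
const expiringStore = makeExpiringStore(() => clock);
expiringStore.put("session", "abc", 10);

clock = 5000;
console.log(expiringStore.get("session")); // abc

clock = 10000;
console.log(expiringStore.has("session")); // true: expired but still stored
console.log(expiringStore.get("session")); // null
console.log(expiringStore.has("session")); // false: the access removed it
```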

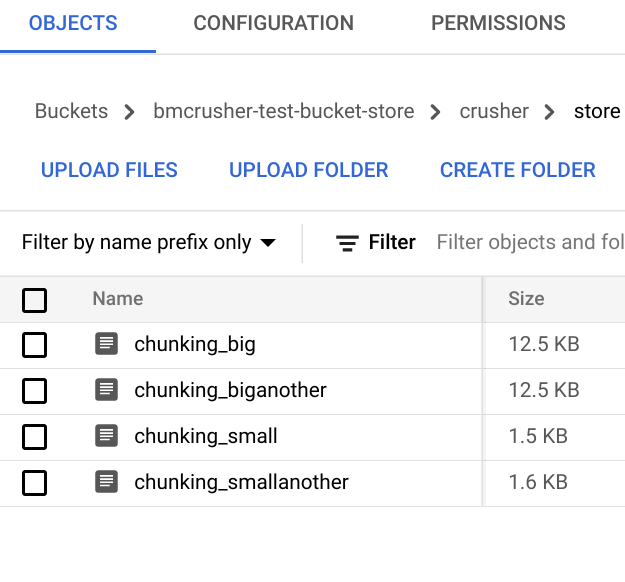

Here’s what some store entries look like on cloud storage

Fingerprint optimization

Since it’s possible that an item will spread across multiple physical records, we want a way of avoiding rewriting (or decompressing) them if nothing has changed. Crusher keeps a fingerprint of the contents of the compressed item. When you write something and it detects that the data you want to write has the same fingerprint as what’s already stored, it doesn’t bother to rewrite the item.

However, if you’ve specified an expiry time, then it will be rewritten so as to update its expiry. There’s a catch though. If your chosen store supports its own automatic expiration (as in the CacheService), then the new expiration won’t be applied. Sometimes this behavior is what you want, but it does mean a subtle difference between different stores.

You can disable this behavior altogether when you initialize the crusher.
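As a rough illustration of the fingerprint check, here’s a sketch that skips the write when the content is unchanged, but rewrites when an expiry is given so the expiry gets refreshed. Everything in it is an assumption for illustration: the names are hypothetical, the hash is a simple FNV-1a style stand-in rather than whatever digest Crusher really uses, and it fingerprints the serialized data rather than the compressed item.

```javascript
// Stand-in fingerprint: a 32-bit FNV-1a style hash over the string's
// UTF-16 code units. Not the digest bmCrusher uses.
function fingerprintOf(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash.toString(16);
}

// Hypothetical store that counts physical writes so the skip is visible.
function makeFingerprintingStore() {
  const items = new Map();
  let writes = 0;
  return {
    // returns true if the item was physically written, false if skipped
    put(key, data, expirySeconds) {
      const text = JSON.stringify(data);
      const fingerprint = fingerprintOf(text);
      const existing = items.get(key);
      const unchanged = Boolean(existing) && existing.fingerprint === fingerprint;
      // skip only when nothing changed and there is no expiry to refresh
      if (unchanged && !expirySeconds) return false;
      items.set(key, { text, fingerprint, expirySeconds: expirySeconds || null });
      writes++;
      return true;
    },
    writeCount() {
      return writes;
    },
  };
}

const fingerprintingStore = makeFingerprintingStore();
console.log(fingerprintingStore.put("config", { a: 1 })); // true: first write
console.log(fingerprintingStore.put("config", { a: 1 })); // false: unchanged
console.log(fingerprintingStore.put("config", { a: 1 }, 60)); // true: expiry refreshed
console.log(fingerprintingStore.writeCount()); // 2
```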

Formats

Crusher writes all data as zipped, base64-encoded text, so the MIME type will be text, and the data will need to be read by bmCrusher to make sense of it. But watch this space – I’ll be adding an option to write vanilla data without the crusher metadata.

Setting up your storage bucket

Actually there’s not much to this. The simplest way is just to make sure you set up your cloud project in the same organization as your Apps Script project, go to storage, come up with a unique name for your storage bucket, and pass it over when initializing the store. I didn’t even have to reassign my Apps Script cloud project or enable any APIs.

I did have to add these scopes to my Apps Script manifest though.
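The exact scope list isn’t reproduced here, so treat the following as an assumption rather than my original list. Both are real Google OAuth scopes: devstorage.full_control is the Cloud Storage scope that allows full control of a bucket, and script.external_request is what Apps Script needs for UrlFetchApp calls. The missingScopes helper is hypothetical and just checks a parsed appsscript.json object for whatever it lacks.

```javascript
// Assumed scopes, not a confirmed list: check them against your own needs.
const assumedScopes = [
  "https://www.googleapis.com/auth/devstorage.full_control",
  "https://www.googleapis.com/auth/script.external_request",
];

// Hypothetical helper: given a parsed appsscript.json manifest, return the
// required scopes that are not declared in its oauthScopes array.
function missingScopes(manifest, required) {
  const declared = new Set(manifest.oauthScopes || []);
  return required.filter((scope) => !declared.has(scope));
}

const exampleManifest = {
  timeZone: "Europe/London",
  oauthScopes: ["https://www.googleapis.com/auth/script.external_request"],
};

console.log(missingScopes(exampleManifest, assumedScopes));
// [ 'https://www.googleapis.com/auth/devstorage.full_control' ]
```

In the manifest itself these go in the oauthScopes array of appsscript.json.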

With those scopes in place, the script OAuth token (passed over when initializing the store) is well enough scoped to do stuff with Cloud Storage. Here’s how to initialize the crusher service with ScriptApp providing the token.

But see below for a more flexible alternative, using a service account.

Using a service account rather than relying on ScriptApp

Although you can manually set up the required scopes in your appsscript.json manifest, it can be a little flaky: adding and removing libraries, or otherwise doing things that modify your appsscript.json, kills the OAuth scopes you’ve added manually. So I recommend that you use a service account to access the storage bucket. With goa it’s a piece of cake.

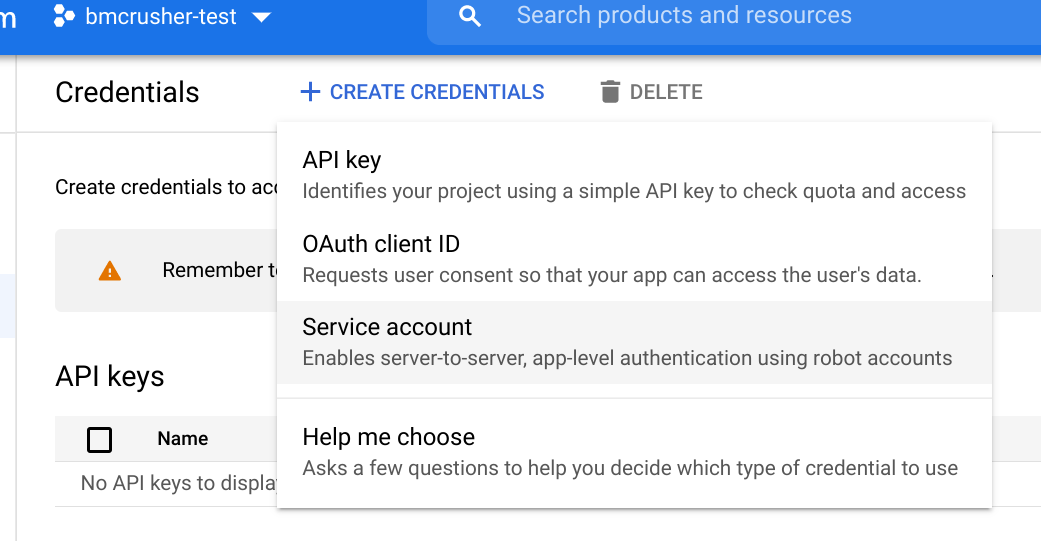

Create your service account

Go to the cloud console of the project that holds the bucket you want to use, and create a new service account.

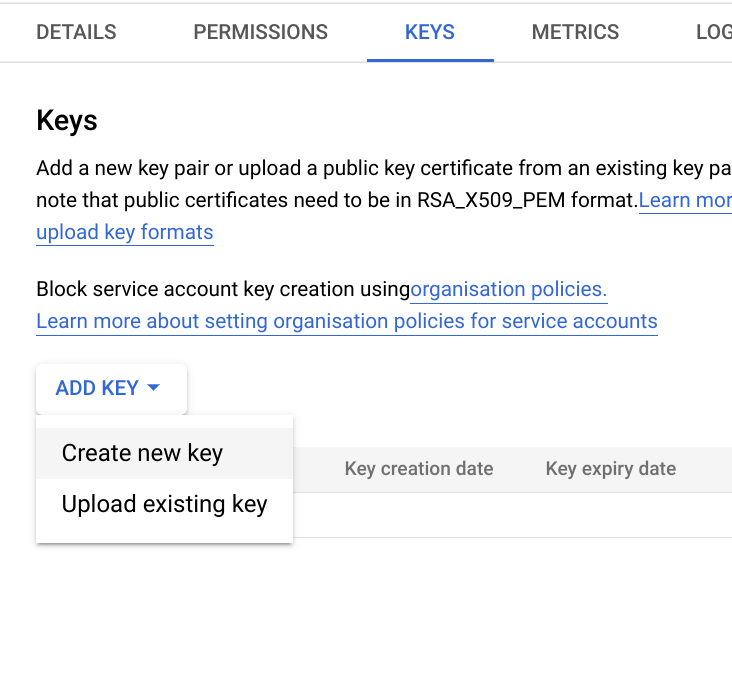

Create a service account key

Next, create a key and give the service account the Cloud Storage admin role (it needs full control to be able to turn on lifecycle management on the bucket).

Then download the JSON file containing the service account credentials to Drive and take note of its file ID on Drive.

Initialise goa

This is a one-off operation – create, run, then delete this function (you won’t need it again), substituting in the fileId of the service account file.

Initialize the crusher

This time instead of getting the access token from ScriptApp, we’ll get it from goa.

Plugin code

The plugin is implemented in bmCrusher, but here it is for anyone interested in writing their own plugin. Since the library it uses has the same methods as the CacheService, we can reuse the CacheServicePlugin for most of the work.

Links

bmCrusher

library id: 1nbx8f-kt1rw53qbwn4SO2nKaw9hLYl5OI3xeBgkBC7bpEdWKIPBDkVG0

Github: https://github.com/brucemcpherson/bmCrusher

Scrviz: https://scrviz.web.app/?repo=brucemcpherson/bmCrusher

cGcsStore

library id: 1w0dgijlIMA_o5p63ajzcaa_LJeUMYnrrSgfOzLKHesKZJqDCzw36qorl

Github: https://github.com/brucemcpherson/cGcsStore

Scrviz: https://scrviz.web.app/?repo=brucemcpherson/cGcsStore

cGoa

library id: 1v_l4xN3ICa0lAW315NQEzAHPSoNiFdWHsMEwj2qA5t9cgZ5VWci2Qxv2

Github: https://github.com/brucemcpherson/cGoa