Motivation

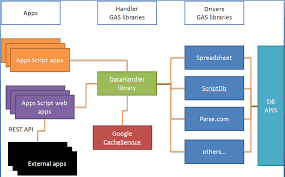

Caching is a great way to improve performance, avoid rate limit problems and even save money if you are accessing a paid-for API from Apps Script. A big limitation, though, is that a single cache entry can hold at most 100KB. One way around this is to compress the data, and another is to split the data across multiple cache entries. This library does both.

I’ve provided a number of cache-related libraries before on this site, but this one is purpose-built and not part of some bigger project, so if you only want Apps Script caching, this is the one you need.

Getting started

You’ll need the bmCachePoint library; its ID is at the end of this post.

Where

- cachePoint: (optional) one of the Apps Script Cache services. If this is null, no caching is done; the class methods can still be called but don’t do anything, which lets you easily test with and without cache

- expiry: (optional) default number of seconds for cache entries to live for

- prefix: (optional) any text. It is used to partition entries from each other in cache. You probably won’t need this
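To picture how a null cachePoint turns every call into a no-op, here’s a rough sketch. It is not the library’s source: `SketchCacher`, its default expiry and `makeFakeCache` are hypothetical names made up for illustration, and the fake cache only mimics the `put`/`get`/`remove` shape of an Apps Script Cache so the sketch can run anywhere.

```javascript
// Hypothetical stand-in for illustration only, not the bmCachePoint source
class SketchCacher {
  constructor({ cachePoint = null, expiry = 60 * 60, prefix = '' } = {}) {
    this.cachePoint = cachePoint; // null means "caching switched off"
    this.expiry = expiry;         // assumed default lifetime in seconds
    this.prefix = prefix;         // partitions entries from each other
  }

  // Writes only when a cache service was supplied
  set(key, data) {
    if (!this.cachePoint) return null;
    this.cachePoint.put(this.prefix + key, data, this.expiry);
    return data;
  }

  // Always a miss when there is no cache service
  get(key) {
    return this.cachePoint ? this.cachePoint.get(this.prefix + key) : null;
  }

  remove(key) {
    if (this.cachePoint) this.cachePoint.remove(this.prefix + key);
  }
}

// In-memory object with the put/get/remove shape of an Apps Script Cache
// (the expiry argument passed to put is ignored here)
const makeFakeCache = () => {
  const store = new Map();
  return {
    put: (k, v) => { store.set(k, v); },
    get: (k) => (store.has(k) ? store.get(k) : null),
    remove: (k) => { store.delete(k); }
  };
};

const withCache = new SketchCacher({ cachePoint: makeFakeCache(), prefix: 'demo-' });
const withoutCache = new SketchCacher({ cachePoint: null });
withCache.set('a', 'hello');
withoutCache.set('a', 'hello'); // silently does nothing
```

The calling code is identical in both cases, which is what makes it easy to switch caching on and off while testing.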

Cache keys

You can provide anything as a cache entry key. Typically, for an API, this will be a URL and possibly some options. Any prefix you provided earlier is also added in to create a unique key for the cache entry.
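One way to turn “anything” into a short, repeatable cache key is to serialize the prefix, key and options in a stable order and hash the result. The sketch below is an assumption about how that could be done, not how the library does it: `stableStringify`, `fnv1a` and `makeKey` are hypothetical helpers, and the small FNV-1a style hash is only for illustration (in Apps Script a proper digest would be a better choice, since a 32-bit hash can collide).

```javascript
// Serialize a value with object properties in sorted order,
// so that { a: 1, b: 2 } and { b: 2, a: 1 } produce the same text
const stableStringify = (value) => {
  if (value === null || typeof value !== 'object') return JSON.stringify(value);
  if (Array.isArray(value)) return '[' + value.map(stableStringify).join(',') + ']';
  return '{' + Object.keys(value).sort()
    .map((k) => JSON.stringify(k) + ':' + stableStringify(value[k]))
    .join(',') + '}';
};

// Small FNV-1a style hash over UTF-16 code units, returned as 8 hex characters
const fnv1a = (text) => {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return (hash >>> 0).toString(16).padStart(8, '0');
};

// Hypothetical key builder: prefix, key and options all feed the hash
const makeKey = ({ prefix = '', key, options = null }) =>
  fnv1a(stableStringify([prefix, key, options]));

const url = 'https://example.com/api/items';
const keyA = makeKey({ prefix: 'p1', key: url, options: { page: 1, size: 50 } });
const keyB = makeKey({ prefix: 'p1', key: url, options: { size: 50, page: 1 } });
const keyC = makeKey({ prefix: 'p2', key: url, options: { page: 1, size: 50 } });
```

Here `keyA` and `keyB` are identical because only the property order differs, while `keyC` comes from a different prefix and so addresses a separate entry.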

What if data is too big

You don’t need to care about the size of the data you are writing to cache. In fact you can’t even know it in advance, since all data is compressed before writing anyway. Just provide the data as a string. If the compressed data is still too big for the maximum size the cache permits, the library automatically creates multiple cache entries and recombines them when you retrieve the entry from cache.
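The splitting and recombining can be pictured with a minimal sketch like the one below. It is an illustration under assumptions, not the library’s implementation: `setChunked`, `getChunked` and the header format are made up, the compression step (for example with `Utilities.gzip` in Apps Script) is left out, and the chunk limit is tiny so the effect is visible. The in-memory `fakeCache` stands in for an Apps Script Cache.

```javascript
// Tiny limit for demonstration; Apps Script allows up to 100KB per entry
const MAX_CHUNK = 10;

// In-memory stand-in with the put/get/remove shape of an Apps Script Cache
const fakeCache = (() => {
  const store = new Map();
  return {
    put: (k, v) => { store.set(k, v); },
    get: (k) => (store.has(k) ? store.get(k) : null),
    remove: (k) => { store.delete(k); }
  };
})();

// Split the data into pieces, write each piece under its own key,
// then write a header entry recording how many pieces there are
const setChunked = (cache, key, data, maxChunk = MAX_CHUNK) => {
  const chunks = [];
  for (let i = 0; i < data.length; i += maxChunk) {
    chunks.push(data.slice(i, i + maxChunk));
  }
  chunks.forEach((chunk, i) => cache.put(`${key}-${i}`, chunk));
  cache.put(key, JSON.stringify({ count: chunks.length }));
  return chunks.length;
};

// Read the header, then every piece; any missing piece counts as a miss
const getChunked = (cache, key) => {
  const header = cache.get(key);
  if (header === null) return null;
  const { count } = JSON.parse(header);
  const parts = [];
  for (let i = 0; i < count; i++) {
    const part = cache.get(`${key}-${i}`);
    if (part === null) return null; // a piece expired or was evicted
    parts.push(part);
  }
  return parts.join('');
};

const written = setChunked(fakeCache, 'big', 'abcdefghijklmnopqrstuvwxyz'); // 3 pieces
const restored = getChunked(fakeCache, 'big');
```

Treating a missing piece as a complete miss matters because cache entries can disappear independently of each other, and a partial result would be worse than none.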

Setting data

Generally speaking you’ll just provide a key and data to the .set method. For API data the key would typically be the URL. All data will be compressed and, if necessary, spread across multiple cache entries.

Advanced setting data

You can also enhance the key by providing an options parameter, which can be anything. To recover the cache entry data, supply the same options and key to the get method. You can also override the default expiry time.

Getting data

You’ll usually just need the key. If there is no cache entry, you’ll get a null response. Data will be combined from multiple cache entries if required, and decompressed.

Advanced getting data

If you provided an options parameter to enhance the key, you’ll need to specify it here too.

Removing entries

You usually don’t need to bother removing items, as they will expire anyway. However, you might want to remove a cache entry if you know it has become stale.
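As an example of the stale case, here’s a small sketch of reading through a cache and removing the entry when the underlying data changes. Everything in it is hypothetical (`demoCache`, `readSource`, `getThroughCache`, `updateSource`); it only illustrates the pattern with an in-memory stand-in and does not use the library’s own methods.

```javascript
// In-memory stand-in with the put/get/remove shape of an Apps Script Cache
const demoCache = (() => {
  const store = new Map();
  return {
    put: (k, v) => { store.set(k, v); },
    get: (k) => (store.has(k) ? store.get(k) : null),
    remove: (k) => { store.delete(k); }
  };
})();

// Hypothetical data source: counts calls so the effect of caching is visible
let sourceCalls = 0;
let sourceValue = 'v1';
const readSource = () => {
  sourceCalls++;
  return sourceValue;
};

// Return the cached value if present, otherwise read the source and cache it
const getThroughCache = (cache, key) => {
  const cached = cache.get(key);
  if (cached !== null) return cached;
  const fresh = readSource();
  cache.put(key, fresh);
  return fresh;
};

// Change the source and drop the cache entry that is now known to be stale
const updateSource = (cache, key, value) => {
  sourceValue = value;
  cache.remove(key);
};

const first = getThroughCache(demoCache, 'item');  // miss: reads the source
const second = getThroughCache(demoCache, 'item'); // hit: served from cache
updateSource(demoCache, 'item', 'v2');
const third = getThroughCache(demoCache, 'item');  // miss again after removal
```

Without the removal in `updateSource`, the third read would keep returning the old value until the entry expired.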

Advanced removing data

If you provided an options parameter to enhance the key, you’ll need to specify it here too.

Links

bmCachePoint : 1GOjfvSZL31TxlF61QT1fVNIKnZw9UeqF_2tkPQ5D1n4BBth-yKc6AznH

Related

Caching, property stores and pre-caching

SuperFetch caching: How does it work?

Apps script caching with compression and enhanced size limitations

Caching across multiple Apps Script and Node projects using a selection of back end platforms

How to prevent unwanted web caching

Database caching

Proxy magic with Google Apps Script

Detect fake news with Google Fact Check tools

Convert any file with Apps Script