What is scraper wiki

Scraper wiki is an environment which allows you to put some structure around data published in web pages. In addition, it has an sqlLite datastore through which many users of scraperWiki make data from their endeavours available. In this blog post, I showed how to use the scraperWiki API to pull data about public projects published on scraperWiki. Now let’s look at getting data out of the scraperwiki datastore into Excel and Google Docs using the scraper wiki API. This page will cover the Excel implementation, with the Google Apps script covered here. However, as with all projects on this site, the implementations are as close as the language syntax allows. All code can be downloaded and is in the cDataSet.xlsm file.

You can scrape directly from VBA as well – see Regex, scraping and visualizing for an example

Before we get started on a walkthrough, this ends up being just a neat one liner to create a table in Excel from a given scraperWiki – it looks like this

Public Sub testScraperWikiData(shortName As String)

scraperWikiStuff shortName, "scraperwikidata"

End Sub

So we’ll assign a button to that, to ask which one you want

<pre>Public Sub testScraperWikiInput()

testScraperWikiData InputBox("shortname?")

End Sub</pre>

Scraping the scraper.

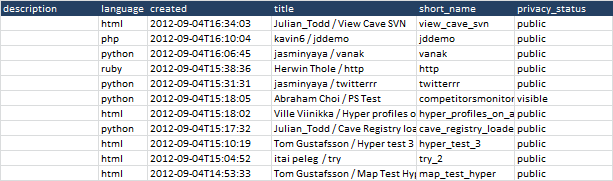

Using Rest to Excel library we can easily populate a page with all known scrapers. Here’s a snippet from a list of about 1000 below.

The short_name is the key to all scrapers in scraperWiki. Not all scrapers have valid data though, so first let’s take a pass through this table and check out which ones have. To do that, we use the API again, this time accessing the sqlLite database schema to see if there are any data tables. For this and for retrieving data we will use this new rest library entry

<pre> With .add("scraperwikidata")

.add "restType", erRestType.erSingleQuery

.add "url", "https://api.scraperwiki.com/api/1.0/datastore/sqlite?format=jsondict&name="

.add "results", ""

.add "treeSearch", False

.add "ignore", vbNullString

End With</pre>

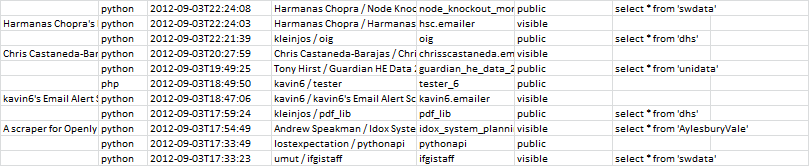

For simplicity we’ll add an extra column showing the default sql that would be needed to get data from the first data table in each scraperWiki’s datastore. That gives us something like this

Here’s a walkthrough of the code to do that –

Loop through each row in the scraperWiki directory and construct a default sql statement for those rows that have a dataStore with a table in it

<pre>Public Sub swSeewhatworks()

Dim ds As New cDataSet, dr As cDataRow

ds.populateData wholeSheet("scraperwiki"), , , , , , True

For Each dr In ds.rows

dr.Where.Resize(, 1).Offset(, dr.columns.count).value = _

swGetDefaultTableSql(dr.toString("short_name"), False)

Next dr

Set ds = Nothing

End Sub</pre>

Get any tables that exist for this shortname, and construct an sql

<pre>Private Function swGetDefaultTableSql(shortName As String, Optional complain As Boolean = True) As String

' this will look up to see what tables are defined in a given scraperwiki

Dim s As String, cr As cRest

Set cr = swGetTables(shortName)

If cr Is Nothing Then

MsgBox ("could get info on " & shortName)

Else

If cr.jObject.hasChildren Then

' this is hokey - for the moment just take from the first table found

swGetDefaultTableSql = "select * from '" & _

cr.jObject.children(1).child("name").toString & "'"

Else

If complain Then MsgBox ("could not find any valid tables for " & _

shortName & "(" & cr.jObject.serialize & ")")

End If

End If

End Function</pre>

Access the sqlLite datastore using the API and see what tables we can find.

<pre>Private Function swGetTables(shortName As String) As cRest

Const tableDirectory = "SELECT name FROM sqlite_master " & _

"WHERE type IN ('table','view') AND name NOT LIKE 'sqlite_%'" & _

"Union all " & _

"SELECT name FROM sqlite_temp_master " & _

"WHERE type IN ('table','view') " & _

"ORDER BY 1"

' lets see if we can get the tables that exist in this shaperwiki

Set swGetTables = restQuery(, "scraperwikidata", _

shortName & "&query=" & tableDirectory, , , , , False)

End Function</pre>

Getting the data

Now we’ve analyzed the whole directory for scrapers with any data, we can pick one and execute it

<pre>Public Sub testScraperWikiInput()

testScraperWikiData InputBox("shortname?")

End Sub</pre>

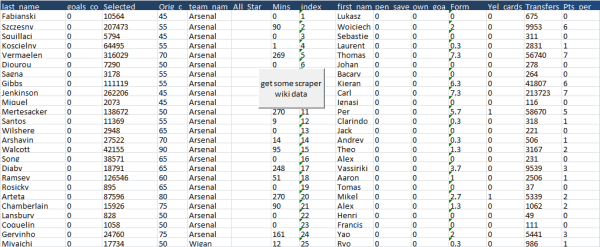

For the purposes of this test I’ve chosen something at random called ‘fantasy_premier_league_player_stats_4’, and here’s a snippet of the data it returns

You can get the code at this gist , but you it is also contained in cdataSet.xlsm, which you can download to include all the needed libraries

scraperWiki is a very powerful tool with which you can create your own datasets, as well as access public datasets of others, and get them into Excel or see Scraperwiki data to Google Docs.

Take a look at how the Excel Rest Library for more like this.