Another quick demo of data sharing

There are many ways to accomplish this of course, but using Caching across multiple Apps Script and Node projects using a selection of back end platforms is pretty fast to set up. To test this, here’s a challenge that shares the data in a spreadsheet with node, set up end to end in less than 5 minutes. This example is almost exactly the same as Sharing data with Upstash between Node and Apps Script – end to example in 5 minutes , and Sharing data between Apps Script and Node using Github back end – 5 minute example except this time, instead of using Upstash (redis) or GitHub – we’re going to use a Google Cloud Storage. Cloud storage allows a much bigger payload so this is a good choice (as it there will be less splitting across multiple records), but it does mean you have create a Cloud project and enable billing etc (although the free tier is very generous).

Plan

- GCS as the back end.

- bmPreFiddler to deal with the data in the spreadsheet

- bmcrusher-node as the node client

- scrviz to quickly find the apps script library credentials

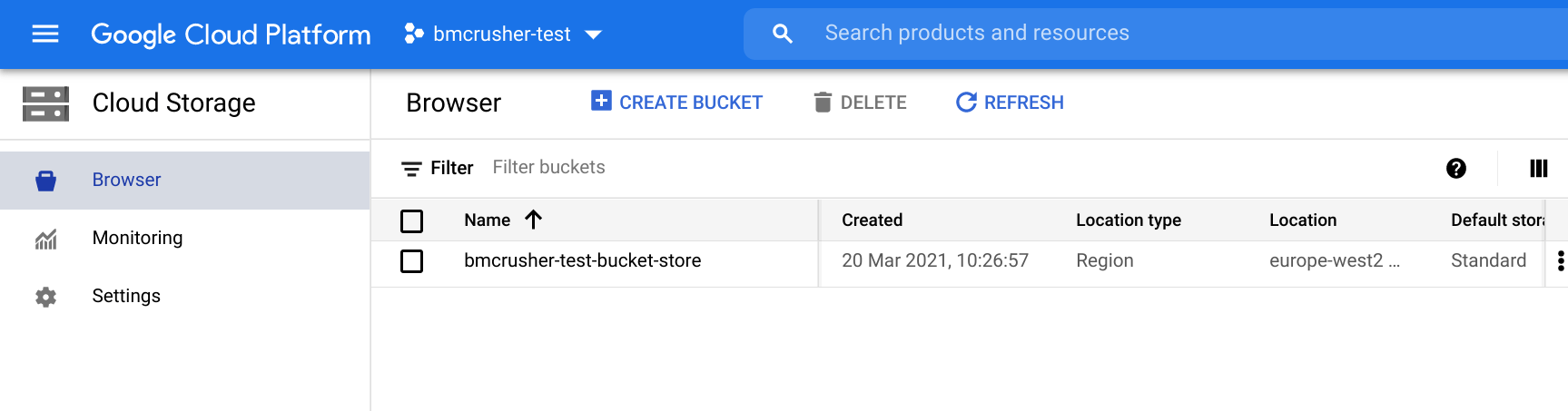

GCS credentials

Assume you’ve set up a service account for the Apps Script version. If not take a look at Google Cloud Storage platform cache plugin for Apps Script crusher. You can use the same service account for the Node end. You’ll also need to create up a cloud storage bucket in the cloud console to use for your store.

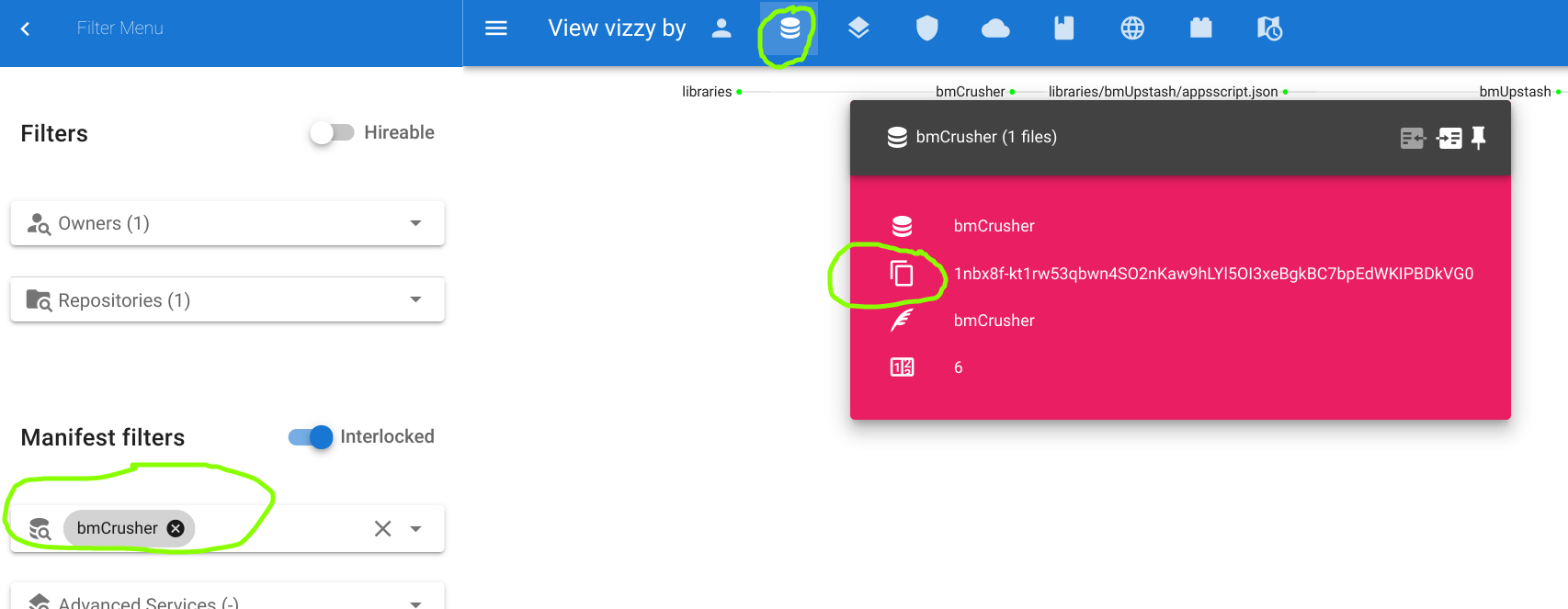

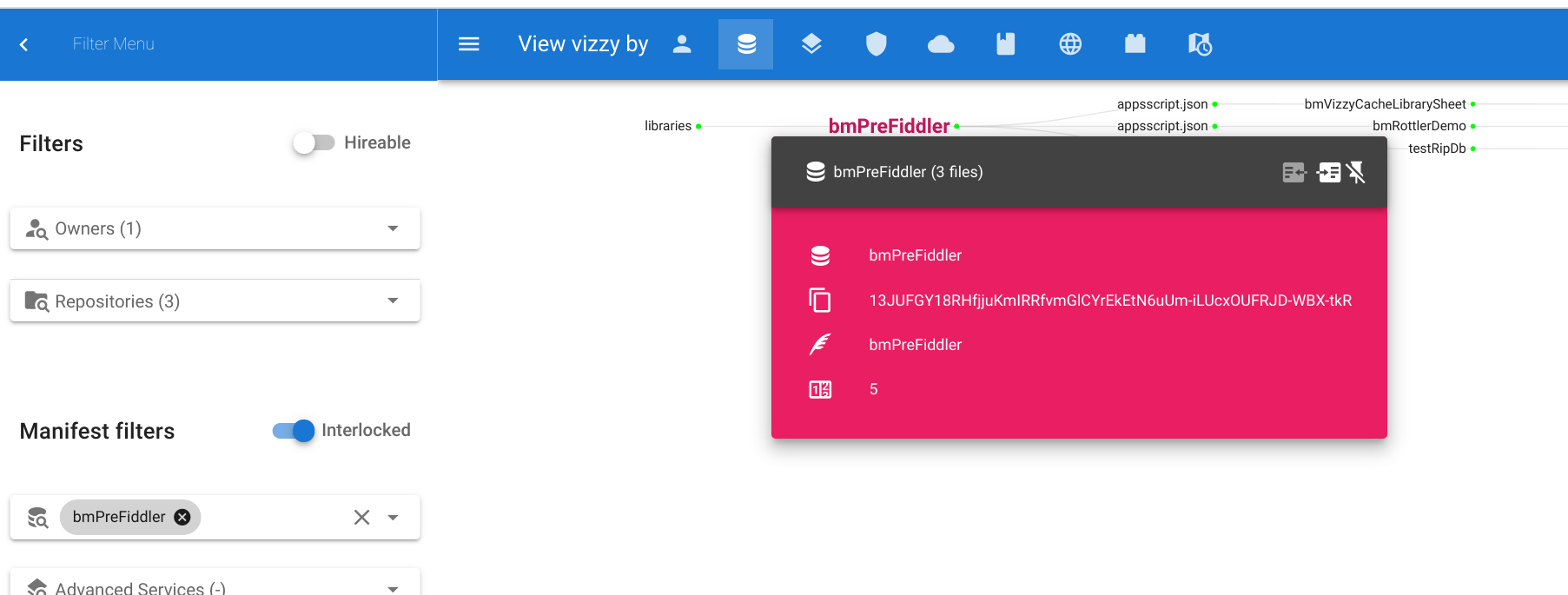

Create an apps script project, and find and add the necessary libraries

We can use scrviz to quickly find the library keys, which are normally a pain to find

- find and add bmCrusher

- find and add bmPreFiddler

Oauth2 for Apps Script

You’ll also need to add the goa library and setup the credentials. See Google Cloud Storage platform cache plugin for Apps Script crusher for how to do that.

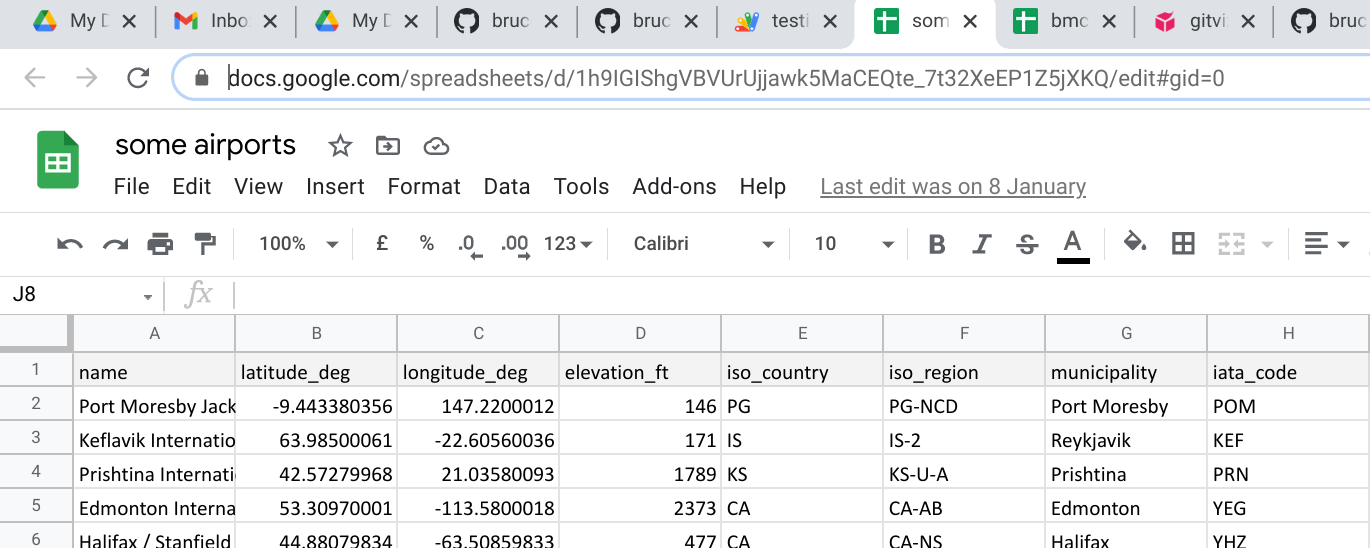

Source data

We’re going to get a sheet from this spreadsheet that contains a bunch of stuff about the main airports worldwide.

so all we need is the spreadsheetId and the name of the sheet, get the data, and write to the upstash store.

Code for getting spreadsheet data and writing to cache

Note that the only difference between backends is how they are initialized. And that’s all there is on the Apps Script side.

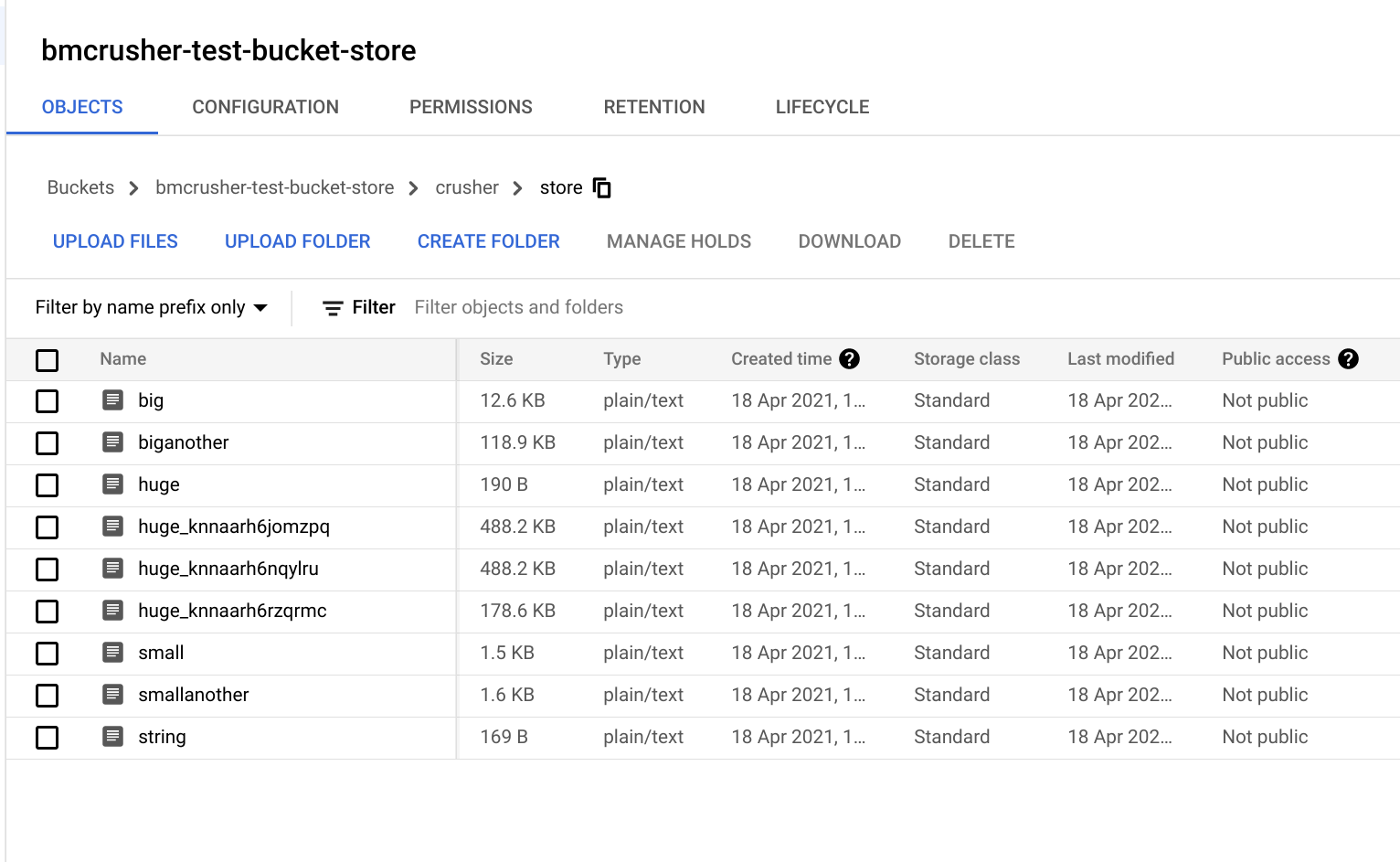

Cloud storage

Using the bucket you set up in the cloud storage console, here’s how it looks with some crusher data in it. For demonstration, I used a smaller chunksize so it would split up the object into multiple chunks, but using the plugin’s default chunksize will make it unlikely that the data will overflow into multiple chunks. In any case, that all happens behind the scenes and you don’t need to care about it.

Node client

The node client is even briefer, but first install the bmcrusher-node module from npm using either npm or yarn

Here’s the code

I’m picking up the service account from a file. This is the same service account .json file you would have downloaded to drive for the Apps Script end. There are a number of strategies in Node to handle Google Cloud credentials, so pick the one you are most comfortable with

And we’re done – in less than 5 minutes –

Of course you could process the data in some way if you want, and write it back for Apps Script to pick up, clean up the store etc.

Links

scrviz Apps Script bmCrusher: https://scrviz.web.app?repo=brucemcpherson%2FbmCrusher

scrviz Apps Script bmPreFiddler: https://scrviz.web.app?repo=brucemcpherson%2FbmPreFiddler

scrviz Apps Script cGoa: https://scrviz.web.app?repo=brucemcpherson%2FcGoa

github bmcrusher-node: https://github.com/brucemcpherson/bmcrusher-node