In My server times are out of sync! I showed a formula to smooth the time differences between multiple servers when working in a collaborative environment, but I started not to believe the results so set out to validate them.

Development environment

I use cloud9 for development and very nice it is too. However, I’m working on push notification for Ephemeral Exchange where multiple servers are involved in writing and monitoring changes, and I’ve discovered that their server times are very poorly synchronized.

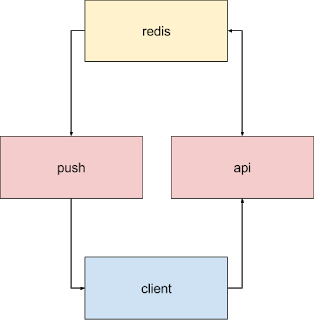

For development, my push and api servers are on cloud9 and the redis servers are on Google compute engine in a docker container. For production, the api server is on Google App engine flexible environment docker container, and the push and redis servers are on Google compute engine, also dockerized.

The problem

All of these components have different ideas of what the time is. I use the API server as master of all times – so when an item is created the creation time is that of the api server. The push server gets to know about changes by subscribing to a redis channel which publishes notifications when it detects a change.

A client subscribes to push notification via the API. If the api server is not at the same time as the push server then notifications coming from redis might not be selected because if the push server’s time is ahead of the api server’s time, it could look like they were made earlier than the subscription actually happened.

On Cloud 9, I found there was a 24 second discrepancy between the push and api server. Here’s the time on two servers checked at more or less the same time (I had to swap between tabs which took a few seconds).

Push server

$ date

Sun May 7 08:52:47 UTC 2017

Api server

$ date

Sun May 7 08:53:05 UTC 2017

A solution

The most accurate way would have been to include a timestamp on each modification in each item, and read it from redis on each change notification. That would have worked, except that

- Because I am monitoring deletes and expirations as well as updates, the items would have been deleted by the time the notification came.

- Some Redis operations used such as ZADD don’t give the opportunity to add a timestamp.

- I would not be able to monitor changes made by means other than the API.

Here’s what I came up with in the end

- The push server publish periodic requests for a time synch using a redis channel.

- The api server subscribes to that channel and reacts to messages by writing an item to redis

- The push server is subscribed to redis changes, and receives a notification when a time sync item is written

This gives me the following information

Using the push server clock

- now – when it has received a push notification for redis

- requestAt – when the push server asked for a timesynch check

- now – requestAt – elapsed time for the entire process

Using the api clock

- writtenAt – when the api server received the request.

Deducing

- If the clocks were at the same time, then writtenAt would be, requestAt + the network delay (the maximum of which will be approximately (now – requestAt) / 2

- The clock time error should be writtenAt – now – (now – requestAt)/2

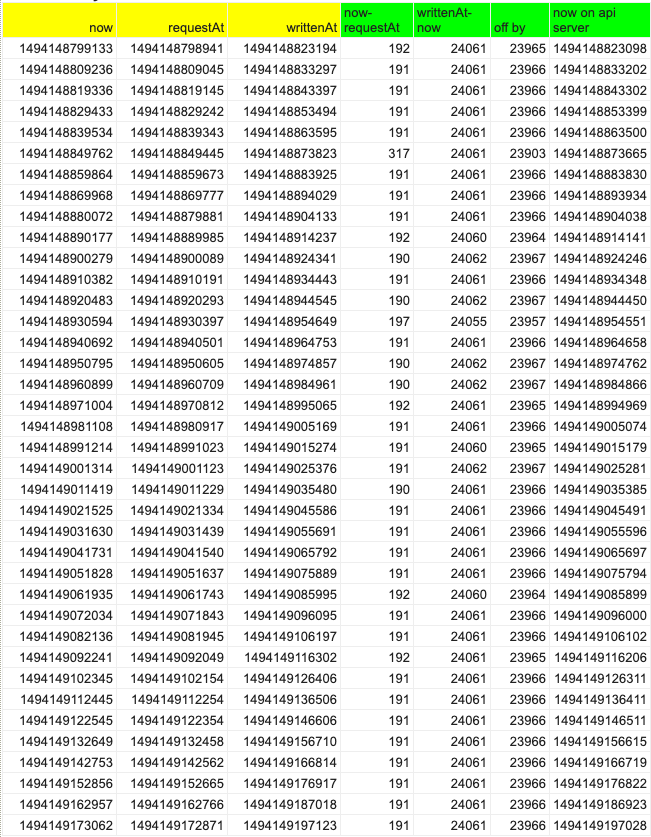

Let’s plug in some observed numbers, (which actually came out extremely consistently)

So this tells me the api server is running 24.2 secs ahead of the push server – compare that with my manual observation from earlier, and adjusting for the few seconds it took me to change tabs between them that looks about right.

Push server

$ date

Sun May 7 08:52:47 UTC 2017

[su_spaver size=”10″]Api server

$ date

Sun May 7 08:53:05 UTC 2017

Using that offset

Now I know that each event I log on the push server typically happens (API server time) about 24 seconds from now (Push server time), so I simply log the event with the adjusted time. I’ll keep that timeoffset up to date by applying the formula from My server times are out of sync! to smooth it out and all should be well.

Does it work?

Here are some observations comparing the real creation time stamped in the record, and the estimated creation time using this method – typically less than 20ms out – so that’ll do just fine.

| created | estimate | values – estimate |

| 1494235792603 | 1494235792621 | 18 |

| 1494235792596 | 1494235792619 | 23 |

| 1494235792608 | 1494235792624 | 16 |

| 1494235792604 | 1494235792622 | 18 |

| 1494235792613 | 1494235792626 | 13 |

| 1494235792614 | 1494235792627 | 13 |

| 1494235792616 | 1494235792628 | 12 |

| 1494235792612 | 1494235792625 | 13 |

| 1494235792694 | 1494235792692 | -2 |

| 1494235792707 | 1494235792718 | 11 |

| 1494235792713 | 1494235792725 | 12 |

| 1494235792705 | 1494235792717 | 12 |

| 1494235792698 | 1494235792713 | 15 |

| 1494235792696 | 1494235792712 | 16 |

| 1494235792773 | 1494235792772 | -1 |

| 1494235792708 | 1494235792719 | 11 |

| 1494235792828 | 1494235792832 | 4 |

| 1494235792711 | 1494235792724 | 13 |

| 1494235792880 | 1494235792879 | -1 |

The code

ns.synchTime = function() {

// I'm using recursive setTimout rather than setInterval

function sched () {

pub()

.then(function() {

return Useful.handyTimer(ns.settings.redisSyncFrequency);

})

.then(function() {

sched();

});

}

// publish a timesync request

function pub() {

return redisSyncPub_.publish(ns.settings.redisSyncChannel, JSON.stringify({

requestAt: new Date().getTime(),

syncId: syncId,

expire:ns.settings.redisSyncFrequency * 2

}));

}

// the first one will generate misleading initialization times, so it'll be ignored

pub().then(function () { sched() });

};

And listen for events, simply by extending the current listening algo to include synch items ( I won’t go into the detail of this here as it’s covered elsewhere).

else if (db === st.db.ts && method === "set" && message.slice(0, st.syncPrefix.length) === st.syncPrefix) {

ns.synchTimeUpdate (message);

}

Here’s the smoothing of each observation. Note that it discards the first one (because of the initialization anomaly), but it also uses a smoothed latency for its calculation and discards observations with extreme or unusual latencies to avoid skewing by network blips. I’m finding that the typical redis write to keyspace notification latency in this configuration (redis in a docker container on a google compute engine, push and api servers on cloud 9) is about 190ms.

ns.synchTimeUpdate = function (message) {

var now = new Date().getTime();

// will look something like this '__keyevent@6__:set sy-.1bfgvorjj.1494145143663'

var s = message.split (".");

var sId = s[1];

// check this is for me

if (sId !== syncId) {

console.log ("received a synch not for me", sId , syncId);

return Promise.resolve(null);

}

// now get the item so the times can be processed

return redisSync_.get (message)

.then (function (res) {

// convert to an object

var ob = Useful.obify (res).ob;

// end to end time

var latency = (now - ob.requestAt);

// smooth the observations

// ignore completely outlying latency as it could be a network blip

if (latency < ns.settings.redisSyncNetWorkOutlier && (!timeLatency || latency < timeLatency * 10) ) {

// api server is golden for time,

// ignore the first one because it'll be misleading since it probably did some initialization

if (timeNobs) {

timeLatency = timeNobs > 1 ? latency * smooth + timeLatency * (1 - smooth) : latency;

var offBy = ob.writtenAt - now - timeLatency/2;

timeOffset = timeNobs > 1 ? offBy * smooth + timeOffset * (1 - smooth) : offBy;

}

timeNobs++;

}

});

};

and the smoothing in action with a few observations. I could probably make the smoothing a little more reactive to more recent numbers by increasing it, but these are very stable numbers, so I’ll leave it for now.

| now | error | smoothed |

| 1494161572358 | 24259 | 24259 |

| 1494161582459 | 24154 | 24248 |

| 1494161592565 | 24154 | 24239 |

| 1494161602669 | 24154 | 24230 |

| 1494161612772 | 24154 | 24223 |

| 1494161622869 | 24155 | 24216 |

| 1494161632973 | 24154 | 24210 |

| 1494161643071 | 24154 | 24204 |

| 1494161653175 | 24154 | 24199 |

| 1494161663280 | 24154 | 24194 |

| 1494161673383 | 24154 | 24190 |

| 1494161683488 | 24154 | 24187 |

| 1494161693585 | 24154 | 24183 |

| 1494161703690 | 24154 | 24180 |

| 1494161713798 | 24153 | 24178 |

| 1494161723901 | 24155 | 24175 |

| 1494161734003 | 24154 | 24173 |

| 1494161744108 | 24152 | 24171 |

| 1494161754209 | 24155 | 24169 |

On the API server

ns.synchInit = function () {

// the push server will ask me to connect

return redisSyncSub_.subscribe(ns.settings.redisSyncChannel)

.then(function(count) {

if (!count) console.log ('failed to subscribe to ', ns.settings.redisSyncChannel);

// every time I get one of these, I'll write something

return redisSyncSub_.on('message', function(channel, message) {

var ob = Useful.obify (message).ob;

ob.writtenAt = new Date().getTime();

return redisSync_.set ([ns.settings.syncPrefix,ob.syncId,ob.writtenAt].join("."), JSON.stringify(ob),"EX", ob.expire)

.then (function (r) {

if (!r)console.log ("failed to write sync item",r,ob.syncId);

});

});

});

};

Using the offset

ns.logEvent(method, message, "redis", now + timeOffset );

For more like this, see React, redux, redis, material-UI and firebase.

Why not join our forum, follow the blog or follow me on Twitter to ensure you get updates when they are available.