Update Nov2017

UPDATE: parse.com as now been closed and moved to parseplatform.org. I will update the content of this page an move the back-end to this platform

We’ve looked at Query Limits on parse.com and Google Visualization API data to prepare for loading the color table from Playing around with GAS color into parse.com. Now to load the data

One of the constraints of parse is that it’s rate limited. That means that we want to minimize the writing that we do, especially with a large data set like this that might need regular batch updating. I’ve decided therefore to

- Get the current contents of the parse table

- Get the Google Apps Data

- Look to see whats missing/changed from the parse table. I’ve decided not to delete anything that exists in the parse table but not in the Google Apps Workbook. So the workbook can be considered to be an incremental update, rather than a replacement.

This way, we’ll minimize the amount of back and forwards to parse.

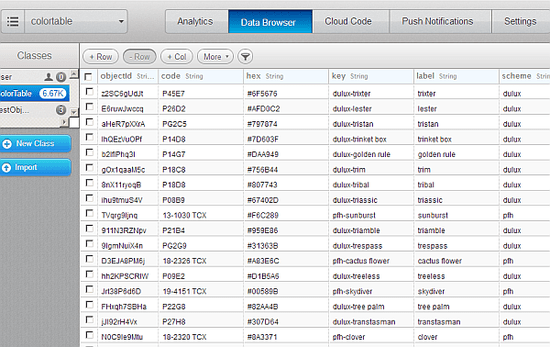

Here’s what the data looks like in the data browser once loaded.

Here’s the whole application. All the components are async, so we’re heavily using promises to bring it all back together again.

<!DOCTYPE HTML>

<html>

<head>

<title></title>

<link rel="stylesheet" type="text/css" href="css/colortable.css">

<script src="http://www.parsecdn.com/js/parse-1.2.2.min.js"></script>

<script src="js/colortable.js"></script>

<script type="text/javascript" src="http://www.google.com/jsapi"></script>

<script type="text/javascript">

google.load('visualization', '1');

google.load("jquery", '1');

</script>

<script type="text/javascript">

google.setOnLoadCallback(function () {

// now we can start....

doTheWork();

});

// get data from google apps, and all data currently in parse.

function doTheWork () {

// get the data from google apps script - async

var dataFromGoogle = getColorTable();

dataFromGoogle.fail(

function(error) {

$("#data").text("error getting google docs data " + error);

});

//get current parse data for scheme - async

var scheme = "";

var parseKeys = getParseKeys();

Parse.initialize(parseKeys.appId, parseKeys.jsKey);

var ColorTable = Parse.Object.extend("ColorTable");

var schemeFromParse = schemePromise(ColorTable, scheme);

schemeFromParse.fail(function (error) {

$("#scheme").text("error getting scheme " + scheme + JSON.stringify(error));

});

// now we can get going

schemeFromParse.done(function (all) {

$("#scheme").text("scheme " + scheme + " returned " + all.length);

dataFromGoogle.done(function(data) {

$("#data").text("data back from gapps");

// start work...

all.sort(obCompare);

var dataToParse = updateParse(all,data,ColorTable);

// wait till all done

$.when.apply($,dataToParse).then (

function (obs) {

$("#save").text( dataToParse.length + " batches saved" );

},

function (errors) {

$("#save").text(JSON.stringify(error));

});

});

});

}

// used to compare for sorting

function obCompare(a,b) {

if (a.get("key") < b.get("key"))

return -1;

if (a.get("key") > b.get("key"))

return 1;

return 0;

}

function updateParse (all,data,model,maxLoad) {

// we have all the current contents of parse and the data from google

// now update or insert the data records where there's been a change

var nr= maxLoad || data.getNumberOfRows()-1 ,nc = data.getNumberOfColumns();

var promises =[];

var batch = [], batchSize = 400;

//find the headings

var colHeaders = {};

for (var i = 0; i < nc; i++) {

colHeaders[data.getColumnLabel(i).toLowerCase()] = i;

}

//now traverse the data and write it as parse models

for (var i = 0 ; i < nr; i++) {

var key = data.getValue(i,colHeaders.name).toString();

var ob = new model(

{key: key,

scheme : data.getValue(i,colHeaders.scheme).toString(),

label : data.getValue(i,colHeaders.label).toString(),

code : data.getValue(i,colHeaders.code).toString(),

hex : data.getValue(i,colHeaders.hex).toString() } ) ;

var a = findInParse (all,ob);

var dirty = true;

if (a) {

// has it changed ?

dirty = false;

for (var k in ob) {

dirty = dirty || ob.get(k) != a.get(k);

}

}

// write or update?

if (dirty) {

if (batch.length >= batchSize) {

promises.push (throttleWrite(batch));

batch = [];

}

batch.push(ob);

}

}

if (batch.length) promises.push (throttleWrite(batch));

return promises;

}

// write throttled

function throttleWrite (batch) {

var promise = $.Deferred();

var throttleTime = 1000;

setTimeout(function () {

Parse.Object.saveAll( batch, {

success: function(obw){

console.log ("wrote batch of " + obw.length);

promise.resolve (obw);

},

error: function(error){

console.log ("failed batch of " + obw.length + "-" + JSON.stringify(error));

promise.reject (error);

}

});

}, throttleTime);

return promise.promise();

}

// see if we have it already..

function findInParse (all,ob) {

return binarySearch(all,ob,0,all.length-1,obCompare);

}

function binarySearch(things, thing, mini, maxi, compare) {

if ( mini > maxi ) return null;

var midi = parseInt((mini + maxi) / 2);

var cmp = compare (thing,things[midi]);

if (cmp) {

return binarySearch (things,thing, cmp > 0 ? midi+1 : mini , cmp < 0 ? midi - 1 : maxi, compare);

}

else

return (things[midi]) ;

}

// get data from google apps

function getColorTable() {

var deferred = $.Deferred();

var workBook = 'http://docs.google.com/spreadsheet/tq?key=0At2ExLh4POiZdGFnVFJEQkpRSXZWYUF2WmF6ZUliU3c';

var sheet ='&sheet=colorTable';

var headers = '&headers=1';

var query = new google.visualization.Query(workBook + sheet + headers);

// get 1st 5 columns

query.setQuery('SELECT A,B,C,D,E options no_format');

// assume its big

query.setTimeout(200);

// Send the query with a callback function.

query.send(function (response) {

if (response.isError())

deferred.reject('Error in query: ' + response.getMessage() + ' ' + response.getDetailedMessage());

else

deferred.resolve(response.getDataTable());

});

return deferred.promise();

}

// get the data for chosen scheme from parse

function schemePromise(model, scheme, allResults, allPromise) {

// find a scheme at a time

var promise = allPromise || $.Deferred();

findChunk(model, scheme, allResults || [])

.done(function (results, allOver) {

if (allOver) {

// we are done

promise.resolve(results);

} else {

// may be more

schemePromise(model, scheme, results, promise);

}

})

.fail(function (error) {

promise.reject(error);

});

return promise.promise();

}

// return in chunks to the parse.com limit

function findChunk(model, scheme, allData) {

// we have to find in chunks since there is a limit on query size

// will return a promise

var limit = 1000;

var skip = allData.length;

var findPromise = $.Deferred();

var query = new Parse.Query(model);

if (scheme) query.equalTo("scheme", scheme);

query

.limit(limit)

.skip(skip)

.find()

.then(function (results) {

findPromise.resolve(allData.concat(results), !results.length);

}, function (error) {

findPromise.reject(error);

});

return findPromise.promise();

}

</script>

</head>

<body>

<div id="scheme"></div>

<div id="data"></div>

<div id="save"></div>

</body>

</html>

Some Gotchas

I already covered the query limits. To avoid rate throttling on Writes, I waited a bit between saves, and used Parse.Object.saveAll(), instead of object.save() to be able to batch up writes together.

Aside from being a bit fiddly because of the asynchroncity, and the few tweaks, it was all pretty straightforward. Now for the GAS/ScripDB version of loading.

For more on parse.com see Parse.com

For help and more information join our forum, follow the blog or follow me on Twitter