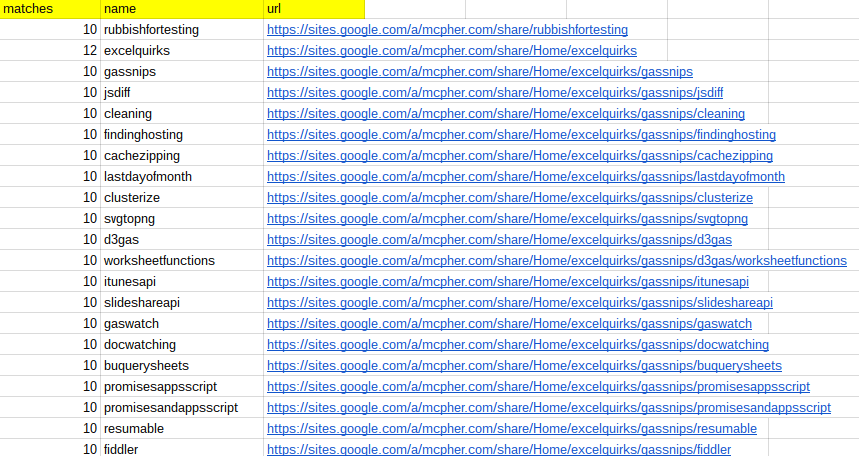

- which pages are using it

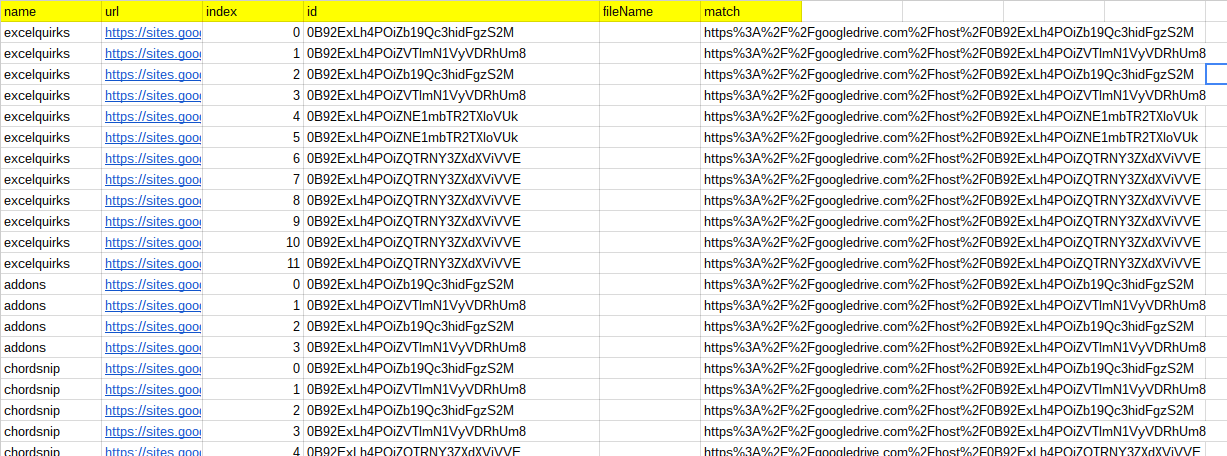

- which files are being referenced

Following on from that, I may automate the replacement with alternative hosting, but for this post, here’s how to get a report from your site of where you are using Drive hosting. It finds URLs in the googledrive.com/host/id style. If you are hosting gadgets on Drive, the URL will be encoded, so it finds those too.
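As a rough illustration of the two URL styles, here is a minimal sketch that runs the same regular expression used by the script later in this post against some made-up page content. The function name `findHostingMatches`, the sample HTML and the ids in it are hypothetical and only there for the example.

```javascript
// Collect every Drive hosting reference in a chunk of html.
// The pattern accepts both "https://googledrive.com/host/<id>" and the
// percent-encoded "https%3A%2F%2Fgoogledrive.com%2Fhost%2F<id>" form.
function findHostingMatches(html) {
  var rx = /https(?:(?:%3A%2F%2F)|(?:\:\/\/))googledrive\.com(?:(?:%2F)|(?:\/))host(?:(?:\/)|(?:%2F))([\w-]{8,})(\/[\w\.-]+)?/gmi;
  var match, matches = [];
  while ((match = rx.exec(html))) {
    matches.push({
      id: match[1],
      // only the folder/file style captures a second group
      fileName: match[2] ? match[2].slice(1) : "",
      match: match[0]
    });
  }
  return matches;
}

// hypothetical page content: one plain folder/file link, one encoded gadget url
var sampleHtml = [
  '<img src="https://googledrive.com/host/0B92ExLh4POiZabc12345/logo.png">',
  '<iframe src="//example.com/gadget?url=https%3A%2F%2Fgoogledrive.com%2Fhost%2F0B92ExLh4POiZxyz98765"></iframe>'
].join("\n");

var found = findHostingMatches(sampleHtml);
```

The first entry in `found` carries both an id and a file name; the second, from the encoded gadget URL, carries only the id.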

After running this, you’ll next want to go to Identifying hosted files

TODO

There are unsolved (maybe unsolvable) problems with writing rewritten HTML back to Sites – specifically, it can break gadgets and will eliminate any of the deprecated AdSense gadgets (if you still have them on your site), so I’ll need to figure those out (if I can) before publishing an automated update solution.

The damage

Here’s the damage I need to sort out on this site:

753 pages in site

459 pages in site with hosting

2773 hosting changes need to be made

The report

You get 2 reports

One that has a summary of the pages needing attention.

And another that has the detail of all the matches needing attention. The fileName will be populated if the hosting pattern involving a folder and file name is used, instead of the more common file id only.
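To make the relationship between the two reports concrete, here is a small sketch of how per-page scan results could be turned into summary rows and detail rows. The sample data, URLs and the helper names `detailRows` and `summaryRows` are assumptions for illustration; the real script further down does the equivalent work with the cUseful Fiddler.

```javascript
// hypothetical scan results, one entry per page that has matches
var work = [{
  name: "page-b",
  url: "https://sites.example.com/b",
  matches: [
    { id: "0Bfolderid1234", fileName: "chart.js",
      match: "https://googledrive.com/host/0Bfolderid1234/chart.js" },
    { id: "0Bfileid56789", fileName: "",
      match: "https://googledrive.com/host/0Bfileid56789" }
  ]
}, {
  name: "page-a",
  url: "https://sites.example.com/a",
  matches: [
    { id: "0Bfileid00001", fileName: "",
      match: "https://googledrive.com/host/0Bfileid00001" }
  ]
}];

// detail report: one row per match, sorted by page url
function detailRows(work) {
  return work.reduce(function (rows, page) {
    page.matches.forEach(function (m, i) {
      rows.push({
        name: page.name, url: page.url, index: i,
        id: m.id, fileName: m.fileName, match: m.match
      });
    });
    return rows;
  }, []).sort(function (a, b) {
    return a.url > b.url ? 1 : (a.url < b.url ? -1 : 0);
  });
}

// summary report: one row per page, with the number of matches on it
function summaryRows(work) {
  return work.map(function (page) {
    return { name: page.name, url: page.url, matches: page.matches.length };
  });
}

var detail = detailRows(work);
var summary = summaryRows(work);
```

With this sample, `detail` has three rows and `summary` has two, and only the folder-style match has a non-empty `fileName`.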

cUseful library

I’ll be using the cUseful library, specifically these techniques.

Here’s the key for the cUseful library, and it’s also on github, or below.

Mcbr-v4SsYKJP7JMohttAZyz3TLx7pV4j

Settings

It starts with the settings, which look like this

    var Settings = (function(ns) {

      ns.sites = {
        search:"", // enter a search term to concentrate on particular pages
        siteName:"share", // site name
        domain:"mcpher.com", // domain
        maxPages:0, // max pages (0 means no limit) - start low to test
        maxChunk:200 // max children to read in one go
      };

      ns.report = {
        sheetId:"19kXFMUh0DNTde_qtvsZ6FH4dAJ4YDCJhIJroLNT3vGY", // sheet id to write to
        sheetName:'site-' + ns.sites.domain + '-' + ns.sites.siteName, // summary sheet name to write to
        sheetHosting:'hosting-' + ns.sites.domain + '-' + ns.sites.siteName // detail sheet name to write to
      };

      return ns;
    })(Settings || {});

The code

Unfortunately, the getAllDescendants() method of sites doesn’t work beyond a certain depth. I didn’t bother to check why, so I’m just recursing through the pages instead, which sweeps up all the pages in the site.
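The idea of walking the tree page by page, reading each page's children in chunks, can be sketched without Sites at all. In the sketch below, `mockPage` is a hypothetical stand-in for a Sites page whose `getChildren` honours `start` and `max`, and `walk` is an illustrative name, not part of any library.

```javascript
// hypothetical stand-in for a Sites page: getChildren honours start and max
function mockPage(name, children) {
  children = children || [];
  return {
    getName: function () { return name; },
    getChildren: function (options) {
      return children.slice(options.start, options.start + options.max);
    }
  };
}

// read the direct children in chunks, then recurse into each child
function walk(root, maxChunk, pages) {
  pages = pages || [];
  var children = [], chunk;
  do {
    // ask for the next chunk, starting after the children already read
    chunk = root.getChildren({ start: children.length, max: maxChunk });
    Array.prototype.push.apply(children, chunk);
  } while (chunk.length);

  children.forEach(function (child) {
    pages.push(child);
    walk(child, maxChunk, pages);
  });
  return pages;
}

// a small tree, three levels deep, read two children at a time
var site = mockPage("root", [
  mockPage("a", [mockPage("a1", [mockPage("a1x")]), mockPage("a2")]),
  mockPage("b"),
  mockPage("c")
]);

var names = walk(site, 2).map(function (p) { return p.getName(); });
```

Because each level is read separately, the depth of the tree only affects how far the recursion goes, not how many pages a single call has to return.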

It’s on GitHub, or below, or there’s a copy of the developing version here. You’ll need the settings namespace at the beginning of this post too and of course the cUseful library reference.

    /**
     * look for places that hosting is being used in site pages
     */
    function lookForHosting() {

      var se = Settings.sites;
      var sr = Settings.report;
      var fiddler = new cUseful.Fiddler();

      // get your site
      var site = SitesApp.getSite(se.domain,se.siteName);
      var pages = getAllPages (site);

      // getAllDescendants doesn't work properly, so I need to do this as a tree
      function getAllPages (root, pages) {

        // store the pages here
        pages = pages || [];

        // get all the children taking account of max chunks and pages so far
        for (var chunk, chunks = [];
          (!se.maxPages || pages.length + chunks.length < se.maxPages) &&
          (chunk = cUseful.Utils.expBackoff(function () {
            return root.getChildren({
              start:chunks.length,
              search:se.search,
              // never ask for more than the pages still allowed by maxPages
              max:se.maxPages ?
                Math.min(se.maxPages - (pages.length + chunks.length), se.maxChunk) :
                se.maxChunk
            });
          })).length;
          Array.prototype.push.apply (chunks , chunk)) {}

        // recurse for all the children of them.
        chunks.forEach (function (d) {
          pages.push (d);
          getAllPages (d , pages);
        });

        return pages;
      }

      Logger.log(pages.length + ' pages in site');

      // find those with hosted stuff
      var work = pages.map(function (d) {

        // get the content
        var html = cUseful.Utils.expBackoff ( function () {
          return d.getHtmlContent();
        });

        // set up the regex for execution
        var rx = /https(?:(?:%3A%2F%2F)|(?:\:\/\/))googledrive\.com(?:(?:%2F)|(?:\/))host(?:(?:\/)|(?:%2F))([\w-]{8,})(\/[\w\.-]+)?/gmi;

        // store the matches on this page
        var match,matches=[];
        while (match = rx.exec(html)) {
          matches.push ({
            id:match[1],
            fileName: match.length > 2 && match[2] && match[2].slice(0,1) === "/" ? match[2].slice(1) : "",
            match:match[0]
          });
        }

        // the description of the match
        return matches.length ? {
          matches:matches,
          name:d.getName(),
          url:d.getUrl()
        } : null;

      })
      .filter (function (d) {
        return d;
      });

      Logger.log(work.length + ' pages in site with hosting');

      // write to a sheet
      var ss = SpreadsheetApp.openById(sr.sheetId);
      var sh = ss.getSheetByName(sr.sheetHosting);

      // if it doesn't exist, create it.
      if (!sh) {
        sh = ss.insertSheet(sr.sheetHosting);
      }

      // clear it
      sh.clearContents();

      // set up the data to write to a sheet
      if (work.length) {

        fiddler.setData (work.reduce(function(p,c) {
          // blow out the records to one per match
          Array.prototype.push.apply (p , c.matches.map(function(d,i) {
            return {
              name:c.name,
              url:c.url,
              index:i,
              id:d.id,
              fileName:d.fileName, // only populated for the folder/file name pattern
              match:d.match
            };
          }));
          return p;
        },[]).sort(function (a,b) {
          return a.url > b.url ? 1 : ( a.url < b.url ? -1 : 0);
        }))
        .getRange(sh.getDataRange())
        .setValues(fiddler.createValues());

        Logger.log(fiddler.getData().length + ' hosting changes need to be made');

        // let's also write a summary sheet of pages on the site that need to be worked
        sh = ss.getSheetByName(sr.sheetName);

        // if it doesn't exist, create it.
        if (!sh) {
          sh = ss.insertSheet(sr.sheetName);
        }

        // clear it
        sh.clearContents();

        // replace the list of matches with a count for the summary
        fiddler.setData(work)
        .mapRows(function (row) {
          row.matches = row.matches.length;
          return row;
        })
        .getRange(sh.getDataRange())
        .setValues(fiddler.createValues());

      }

    }
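The Sites calls in the script are wrapped in cUseful.Utils.expBackoff so that they are retried if they fail. The sketch below shows the general idea of retrying with exponentially growing waits. It is not the cUseful implementation: the name `withBackoff`, its options, and the choice to retry on any error are all assumptions made for illustration.

```javascript
// Retry fn with exponentially growing waits between attempts.
// options.sleep lets the caller supply the wait function; in Apps Script
// the default falls back to Utilities.sleep, elsewhere it does not wait.
function withBackoff(fn, options) {
  options = options || {};
  var maxAttempts = options.maxAttempts || 5;
  var baseMs = options.baseMs || 500;
  var sleep = options.sleep || function (ms) {
    if (typeof Utilities !== "undefined") Utilities.sleep(ms);
  };

  for (var attempt = 1; ; attempt++) {
    try {
      return fn();
    } catch (err) {
      // give up once the attempts are used, passing on the last error
      if (attempt >= maxAttempts) throw err;
      // wait base * 2^(attempt-1), plus some random jitter
      sleep(baseMs * Math.pow(2, attempt - 1) + Math.round(Math.random() * baseMs));
    }
  }
}

// usage: a hypothetical flaky call that fails twice and then succeeds
var calls = 0;
var waits = [];
var result = withBackoff(function () {
  calls++;
  if (calls < 3) throw new Error("service temporarily unavailable");
  return "ok";
}, {
  baseMs: 100,
  sleep: function (ms) { waits.push(ms); } // record the waits instead of sleeping
});
```

Passing the sleep function in keeps the sketch testable outside Apps Script; a real helper would normally retry only on errors known to be transient.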